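• F values: The ratio of the between-groups mean square to the within-groups mean square for a variable, where each mean square is the corresponding sum of squares divided by its degrees of freedom.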

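• Wilks’ Lambda: For a single variable, the ratio of the within-groups sum of squares to the total sum of squares; for the entire set of variables in the analysis, the corresponding ratio of the determinants of the within-groups and total sums-of-squares-and-cross-products matrices. Wilks’ Lambda varies between 0 and 1: values near 0 indicate that the group means differ, and values near 1 indicate that they do not. Also called the U statistic.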

• Classification matrix: A matrix that cross-tabulates actual against predicted group membership, giving the number of correctly classified and misclassified cases.

• Hit Ratio: Percentage of cases correctly classified by the discriminant function.
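The classification matrix and hit ratio defined above can be illustrated with a minimal sketch. The labels below are hypothetical data, and the function names `classification_matrix` and `hit_ratio` are illustrative helpers, not part of any SAS or Python library.

```python
from collections import Counter


def classification_matrix(actual, predicted):
    """Count cases for every (actual group, predicted group) pair."""
    groups = sorted(set(actual) | set(predicted))
    counts = Counter(zip(actual, predicted))
    # Rows are actual groups, columns are predicted groups.
    matrix = [[counts[(a, p)] for p in groups] for a in groups]
    return groups, matrix


def hit_ratio(actual, predicted):
    """Percentage of cases whose predicted group equals the actual group."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return 100.0 * correct / len(actual)


# Hypothetical labels for eight cases (illustrative, not from the text).
actual = ["A", "A", "A", "A", "B", "B", "B", "B"]
predicted = ["A", "A", "A", "B", "B", "B", "A", "B"]

groups, matrix = classification_matrix(actual, predicted)
print(groups)                        # ['A', 'B']
print(matrix)                        # [[3, 1], [1, 3]]
print(hit_ratio(actual, predicted))  # 75.0
```

The diagonal of the matrix holds the correctly classified cases, so the hit ratio is the diagonal total divided by the number of cases.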

The DISCRIM Procedure

• PROC DISCRIM can be used for many different types of analysis, including:

• canonical discriminant analysis

• assessing and confirming the usefulness of the functions (empirical validation and crossvalidation)

• predicting group membership on new data using the functions (scoring)

• linear and quadratic discriminant analysis

• nonparametric discriminant analysis

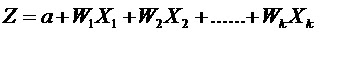

Discriminant Function

Linear discriminant analysis constructs one or more discriminant equations (linear combinations of the predictor variables Xk) such that the different groups differ as much as possible on the discriminant score Z:

$$Z = a + W_1 X_1 + W_2 X_2 + \dots + W_k X_k$$

Where,

Z = Discriminant score, a number used to predict group membership of a case

a = Discriminant constant

Wk = Discriminant weight or coefficient, a measure of the extent to which variable Xk discriminates among the groups of the DV

Xk = An independent (predictor) variable. Predictors are normally metric; a non-metric predictor has to be dummy-coded before it is used.

Number of discriminant functions = min (number of groups – 1, k).

k = Number of predictor variables.
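As a minimal sketch of the definitions above, the following computes a discriminant score for one case and the number of discriminant functions. The constant, weights and case values are hypothetical, and the function names are illustrative.

```python
def discriminant_score(a, weights, x):
    """Z = a + W1*X1 + ... + Wk*Xk for a single case."""
    if len(weights) != len(x):
        raise ValueError("need exactly one weight per predictor")
    return a + sum(w * xk for w, xk in zip(weights, x))


def number_of_functions(n_groups, k):
    """min(number of groups - 1, k), with k the number of predictors."""
    return min(n_groups - 1, k)


# Hypothetical constant, weights and case (illustrative values only).
a = -2.0
weights = [0.5, 1.5]
case = [2.0, 1.0]

print(discriminant_score(a, weights, case))  # 0.5
print(number_of_functions(3, 5))             # 2
print(number_of_functions(2, 5))             # 1
```

With two groups there is always a single discriminant function, however many predictors are used.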

Discriminant Function : Interpretation

• The weights are chosen so that a discriminant score Z can be computed for each subject and the groups can then be compared with a one-way ANOVA on Z.

• More precisely, the weights of the discriminant function are calculated so that the ratio (between groups SS)/(within groups SS) of the scores Z is as large as possible.

• The value of this ratio is the eigenvalue of the discriminant function.

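• The first discriminant function Z1 has the largest eigenvalue and gives the greatest possible separation among the groups.

• The second discriminant function Z2 is uncorrelated with Z1 and gives the greatest separation among the groups not already captured by Z1, and so on for later functions.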

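Note: Discriminant analysis estimates the constant a and the weights Wk so that the Within Group SS is as small as possible relative to the Between Group SS. With two groups, the resulting weights are proportional to those from an OLS regression of a group indicator on the predictors.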
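The maximization described above can be checked numerically in the two-group case. The sketch below assumes two predictors and hypothetical data, and uses Fisher's closed-form two-group solution (weights proportional to the inverse of the pooled within-group scatter matrix times the difference of the group means) rather than a general eigenvalue routine. All function names are illustrative.

```python
def mean_vector(rows):
    n = len(rows)
    return [sum(r[c] for r in rows) / n for c in range(len(rows[0]))]


def within_scatter(groups):
    """Pooled within-group sums of squares and cross-products (2 x 2)."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for rows in groups:
        m = mean_vector(rows)
        for r in rows:
            d = [r[0] - m[0], r[1] - m[1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    return s


def fisher_weights(g1, g2):
    """Weights proportional to inverse(S_within) * (mean1 - mean2)."""
    s = within_scatter([g1, g2])
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    m1, m2 = mean_vector(g1), mean_vector(g2)
    d = [m1[0] - m2[0], m1[1] - m2[1]]
    # Explicit 2 x 2 inverse applied to the mean difference.
    return [(s[1][1] * d[0] - s[0][1] * d[1]) / det,
            (-s[1][0] * d[0] + s[0][0] * d[1]) / det]


def ss_ratio(weights, g1, g2):
    """(between groups SS) / (within groups SS) of Z = W1*X1 + W2*X2.

    The constant a is omitted because it shifts every score equally
    and so does not change either sum of squares.
    """
    z_groups = [[weights[0] * r[0] + weights[1] * r[1] for r in g]
                for g in (g1, g2)]
    all_z = z_groups[0] + z_groups[1]
    grand = sum(all_z) / len(all_z)
    between = sum(len(z) * (sum(z) / len(z) - grand) ** 2 for z in z_groups)
    within = sum((v - sum(z) / len(z)) ** 2 for z in z_groups for v in z)
    return between / within


# Hypothetical two-predictor data for two groups (illustrative only).
g1 = [(2.0, 3.0), (3.0, 4.0), (4.0, 4.0), (3.0, 5.0)]
g2 = [(5.0, 2.0), (6.0, 3.0), (7.0, 3.0), (6.0, 1.0)]

w = fisher_weights(g1, g2)
best = ss_ratio(w, g1, g2)
print(round(best, 4))  # 10.1333, the eigenvalue for these data

# No other weight vector tried here gives a larger ratio.
for other in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]):
    print(other, round(ss_ratio(other, g1, g2), 4))
```

Rescaling the weights leaves the ratio unchanged, which is why discriminant weights are only determined up to a scaling convention.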

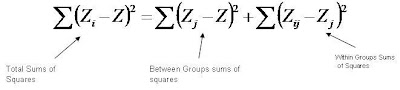

Partitioning Sums of Squares in Discriminant Analysis

In Linear Regression:

• Total sums of squares are partitioned into Regression sums of squares and Residual sums of squares.

• The goal is to estimate parameters that minimize the Residual SS.

In Discriminant Analysis:

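• The Total sums of squares is partitioned into Between Group sums of squares and Within Groups sums of squares:

$$\sum_{j} \sum_{i \in j} (Z_i - \bar{Z})^2 = \sum_{j} \sum_{i \in j} (\bar{Z}_j - \bar{Z})^2 + \sum_{j} \sum_{i \in j} (Z_i - \bar{Z}_j)^2$$

Where,

i = an individual case,

j = group j

$Z_i$ = individual discriminant score

$\bar{Z}$ = grand mean of the discriminant scores

$\bar{Z}_j$ = mean discriminant score for group j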

Here, the goal is to estimate parameters that minimize the Within Group sums of squares relative to the Between Group sums of squares.
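The partition can be verified on a small set of discriminant scores. The scores below are hypothetical, and `partition_ss` is an illustrative helper. The sketch also derives the eigenvalue, the single-variable Wilks' Lambda and the F value from the same three sums of squares.

```python
def partition_ss(groups):
    """Return (total, between, within) sums of squares for grouped scores."""
    all_z = [z for g in groups for z in g]
    grand = sum(all_z) / len(all_z)
    total = sum((z - grand) ** 2 for z in all_z)
    # Every case in group j contributes (group mean - grand mean)^2,
    # which is the same as n_j times that squared difference.
    between = sum(len(g) * (sum(g) / len(g) - grand) ** 2 for g in groups)
    within = sum((z - sum(g) / len(g)) ** 2 for g in groups for z in g)
    return total, between, within


# Hypothetical discriminant scores for two groups of three cases each.
scores = [[1.0, 2.0, 3.0], [5.0, 6.0, 7.0]]
total, between, within = partition_ss(scores)
print(total, between, within)  # 28.0 24.0 4.0

n_cases = sum(len(g) for g in scores)
n_groups = len(scores)

eigenvalue = between / within   # 6.0
wilks_lambda = within / total   # 4/28, about 0.143
f_value = (between / (n_groups - 1)) / (within / (n_cases - n_groups))  # 24.0
print(eigenvalue, round(wilks_lambda, 3), f_value)
```

A small Within Group SS relative to the Between Group SS therefore shows up as a large eigenvalue, a large F value and a Wilks' Lambda close to 0.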