Data preparation for the Analysis

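Before running Discriminant Analysis, the data must be prepared: missing values are imputed, outliers are treated, and the sample is split into training and validation sets.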

Missing Value Imputation and normality of dependent variable

Neither the independent variables nor the dependent variable that go into the model should have any missing values. If the dependent variable is missing for an observation, which is rarely the case, then that observation should be discarded.

For the independent variables, missing values are replaced (imputed). Some of the commonly used imputation techniques are:

- Replacing by the median

- Replacing by the mode

- Replacing by 0

- Replacing by other logical values

Median is preferred to mean because it is not impacted by extreme values. Mode is used when the variable is discrete or categorical, where the mode is the most frequently occurring value. 0 is mostly used for indicator/dummy/binary variables. Other logical values can also be used based on their business implications.
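
The median and zero replacements described above can be sketched in SAS as follows. This is a minimal illustration only: the data set work.raw, the class variable group, the continuous predictors x1-x3 and the indicator flag are all hypothetical names, not taken from this document.

```sas
/* Hypothetical names: work.raw, group, flag, x1-x3. */

/* Discard observations whose dependent (class) variable is missing, */
/* and replace a missing indicator variable by 0.                    */
data work.nomiss_class;
   set work.raw;
   if not missing(group);            /* subsetting IF keeps only non-missing groups */
   if missing(flag) then flag = 0;   /* 0 for indicator/dummy/binary variables      */
run;

/* REPONLY replaces only the missing values and leaves all other values */
/* unchanged; METHOD=MEDIAN uses each variable's own median.            */
proc stdize data=work.nomiss_class out=work.imputed reponly method=median;
   var x1 x2 x3;
run;
```

Replacement by the mode is not shown; it would first need the most frequent value of each variable, which PROC FREQ can provide.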

Outlier Treatment

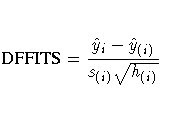

A single observation that is substantially different from all other observations can make a large difference in the results of the Discriminant analysis. If a single observation (or small group of observations) substantially changes the results, one would want to know about this and investigate further.

High values are known as upper outliers and low values as lower outliers. Such values should be modified, or they will bias the estimation of the model parameters. The simplest and most commonly used treatment is to cap values that lie above the 99th percentile or below the 1st percentile of the population. That is, a value above the 99th percentile is replaced by the value at the 99th percentile, and a value below the 1st percentile is replaced by the value at the 1st percentile.
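
A minimal sketch of this percentile capping for one variable, assuming a hypothetical input data set work.imputed and a hypothetical numeric variable x1:

```sas
/* Hypothetical names: work.imputed, x1. */

/* Compute the 1st and 99th percentiles of x1.                   */
/* PCTLPRE=x1_p names the output variables x1_p1 and x1_p99.     */
proc univariate data=work.imputed noprint;
   var x1;
   output out=work.pctl pctlpts=1 99 pctlpre=x1_p;
run;

/* Read the one-row percentile data set once; its values are retained */
/* for every observation, so each x1 can be capped against them.      */
data work.capped;
   if _n_ = 1 then set work.pctl;
   set work.imputed;
   if not missing(x1) then x1 = min(max(x1, x1_p1), x1_p99);
   drop x1_p1 x1_p99;
run;
```

The same two steps would be repeated, or wrapped in a macro, for each variable that needs treatment.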

Use the entire data for identifying attributes pertaining to group differences and comparison.

Training & Validation data

Once data preparation is done, the entire population is split into a modeling (training) population and a validation population. The split is random, so the distribution of the dependent/class variable is roughly the same in both samples, and the independent variables are assumed to have similar characteristics in both. The model is built on the modeling population and then applied to the validation population. Its performance should be similar on both samples.

- Split the sample data into two data sets, viz. a training sample and a validation/holdout sample.

- The split ratio can be 50-50 or 60-40, depending on the sample size.

- Ensure that each group of the grouping variable (categorical variable) is represented in the same proportion in the training and holdout samples.

Training sample: The data set used to compute the Discriminant function.

Validation/Holdout sample: The data set used to validate the accuracy of classification (prediction) based on the function computed using the training data set.
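
One way to obtain such a split in SAS is stratified random sampling with PROC SURVEYSELECT. The sketch below assumes a 60-40 split and hypothetical names (work.capped for the prepared data, group for the grouping variable); the seed value is arbitrary.

```sas
/* Hypothetical names: work.capped, group. */

/* STRATA requires the input to be sorted by the stratification variable. */
proc sort data=work.capped out=work.sorted;
   by group;
run;

/* Draw 60% of each group at random. OUTALL keeps every observation and */
/* adds the flag Selected (1 = sampled, 0 = not sampled).               */
proc surveyselect data=work.sorted out=work.split
                  method=srs samprate=0.6 seed=12345 outall;
   strata group;
run;

/* Sampled observations form the training set, the rest the holdout set. */
data work.train work.holdout;
   set work.split;
   if selected = 1 then output work.train;
   else output work.holdout;
run;
```

Sampling within each stratum keeps the group proportions in the training and holdout samples close to those of the full data.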

Selection of variables for analysis (reduction of variables)

- Dependent variable: The grouping variable can have two or more groups.

- Independent variable: Selected based on

a) A priori business understanding or logic.

b) Ensure there is no multicollinearity among the selected independent variables.

c) Stepwise Discriminant Analysis

a. Can be performed to identify, from a large set of independent variables, the subset (discriminators) with high discriminating power.

b. The selection of independent variables for the function is based on the individual F-values. Hence, check whether any independent variable has been excluded from the Discriminant function because the data did not support it, even though the variable still makes logical sense.

c. SAS Code:

PROC STEPDISC DATA = <libname.data set-name>;

CLASS <grouping variable>;

VAR <Independent variables>;

RUN;
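
For illustration, a filled-in version of the variable-selection step might look like the sketch below. The names work.train, group and x1-x3 are hypothetical, and the significance levels are illustrative values rather than recommendations. The PROC CORR step is one simple way to screen for multicollinearity before selection.

```sas
/* Hypothetical names: work.train, group, x1-x3. */

/* Pairwise correlations among the candidate predictors: */
/* a quick screen for multicollinearity.                 */
proc corr data=work.train;
   var x1 x2 x3;
run;

/* Stepwise selection of discriminators. SLENTRY= and SLSTAY= are the */
/* significance levels for a variable to enter and to stay.           */
proc stepdisc data=work.train method=stepwise slentry=0.15 slstay=0.15;
   class group;
   var x1 x2 x3;
run;
```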

For details about STEPDISC Procedure Options, please see the link below:

Discriminant Analysis (Direct Method)

Once the first-cut variable selection has been done using PROC STEPDISC, PROC DISCRIM can be used to compute the Discriminant function:

PROC DISCRIM DATA= <libname.data set-name> POOL= YES CANONICAL CROSSVALIDATE ANOVA MANOVA LIST OUTSTAT = <filename>;

CLASS < grouping variable>;

VAR <Independent variables>;

PRIORS PROP;

RUN;

Some useful options and their meaning:

- PROC DISCRIM = Procedure for Linear Discriminant Analysis

- PROC STEPDISC = Procedure for Stepwise Discriminant Analysis

- DATA = Data set

- POOL = YES : To use the pooled covariance matrix in calculating the Discriminant function

  - POOL=NO | TEST | YES

Determines whether the pooled or within-group covariance matrix is the basis of the measure of the squared distance. If you specify POOL=YES, PROC DISCRIM uses the pooled covariance matrix in calculating the (generalized) squared distances. Linear discriminant functions are computed. If you specify POOL=NO, the procedure uses the individual within-group covariance matrices in calculating the distances. Quadratic discriminant functions are computed. The default is POOL=YES. When you specify METHOD=NORMAL, the option POOL=TEST requests Bartlett’s modification of the likelihood ratio test (Morrison 1976; Anderson 1984) of the homogeneity of the within-group covariance matrices. The test is unbiased (Perlman 1980). However, it is not robust to non-normality. If the test statistic is significant at the level specified by the SLPOOL= option, the within-group covariance matrices are used. Otherwise, the pooled covariance matrix is used. The discriminant function coefficients are displayed only when the pooled covariance matrix is used.

- CANONICAL : Performs canonical Discriminant analysis

- CROSSVALIDATE: Classifies each observation in the data set using a Discriminant function computed from all the other observations, excluding the observation being classified (i.e. leave-one-out classification)

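- ANOVA: Displays univariate statistics for testing, one variable at a time, whether the group means are equal

- MANOVA: Displays multivariate statistics for testing whether the group means are equal

- LIST: Displays the classification result for each observation, based on the Discriminant function computed using the entire data set; the Hit ratio (correct classification) follows from these results.

- OUTSTAT: Saves the calibration information (Discriminant function) to an output data set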

- CLASS: Grouping variable

- VAR: Set of independent variables

- PRIORS PROP: Sets the prior probabilities of group membership proportional to the group sizes in the sample.
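
To validate the function on the holdout sample, PROC DISCRIM can score a second data set in the same run through its TESTDATA= and TESTOUT= options. The sketch below is illustrative only; work.train, work.holdout, work.scored, group and x1-x3 are hypothetical names.

```sas
/* Hypothetical names: work.train, work.holdout, work.scored, group, x1-x3. */

/* Compute the Discriminant function on the training sample and   */
/* classify the holdout sample with it. TESTOUT= saves the scored */
/* holdout observations, including the assigned group _INTO_.     */
proc discrim data=work.train testdata=work.holdout testout=work.scored
             pool=yes crossvalidate;
   class group;
   var x1 x2 x3;
   priors prop;
   testclass group;   /* actual group in the holdout data, used to count misclassifications */
run;

/* Actual versus assigned group in the holdout sample. */
proc freq data=work.scored;
   tables group*_into_ / nocol nopercent;
run;
```

Comparing the holdout hit ratio with the training and cross-validated hit ratios shows whether the function performs similarly on both samples.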

Reference: